The arrays of transmission lines towering over vast expanses of fields, and sometimes over more difficult terrain, mark the spread of electricity into countryside life. They are such a humdrum backdrop on a road trip that we barely notice them. Yet they are the lifeline of modern society and exemplify the long journey electricity takes to reach our homes.

Electricity is used in every aspect of our lives. Its reliability is important to support critical appliances in hospitals, operate machines in industries, and run public services efficiently.

For a country, the penetration of electricity into all households and the reliability of its supply are important indicators of the well-being of its citizens and of a business-friendly environment.

There are several complexities in the electricity market:

- Lack of Storage – Electricity storage technology has not yet reached the scale needed for deployment at grid level. Hence, electricity has to be produced at the same instant it is consumed.

- Inelasticity of Demand – When supply falls short, wholesale electricity prices surge. This has no influence on household end-users, who pay a fixed price for electricity. This iron curtain between the consumers and suppliers of electricity makes end-users indifferent to any price change, so demand does not fall when prices rise. Instead, there are mechanisms to ramp up production to meet the demand.

- Congestion – There is a technical limit to the amount of power that a wire can carry. This limits the amount of electricity that can be transmitted from one region to another.

- Transmission and Distribution Losses – The electricity generated by a power plant is subject to ohmic (resistive) losses during its long journey to the end-users. This means the power delivered at the receiving end of a line is less than the power injected at the sending end.

This litany of problems makes the electricity market very different from other markets, and mechanisms are in place to overcome these hurdles. The objective of this article is to provide a basic overview of such mechanisms. We will browse through the technology of electricity infrastructure, the participants in electricity markets and the mechanisms that ensure supply-demand balance.

Power Generation

Nature has provided us with a plethora of forms of primary energy: fossil fuels, wind energy, solar energy, etc. In its raw form, primary energy cannot be used to satisfy our needs; it has to be converted to a usable form of energy. The task of converting primary energy to secondary energy, i.e. electricity, is done by a power plant.

The power produced by multiple power plants is pooled together before it makes its long journey to households, businesses or industries. The power pool is an essential component of electricity infrastructure because it facilitates economies of scale. A single power-producing unit is prone to operational failure, so pooling improves the security of the system by making it less dependent on any single power plant. Pooling also promotes competition among power plants, which results in lower prices and innovation. The implication of pooling is that a consumer never receives power from a specific power plant.

Because electricity demand varies every second, the generation fleet must be able to follow it. Power plants have technical limitations, on the basis of which they can be classified as:

- Baseload Power Plant – A baseload power plant can operate continuously for a long time (years) without shutting down and usually produces power on the order of hundreds of MW. Since it cannot shut down or restart at short notice, it serves the purpose of meeting the average minimum demand for electricity. Coal, hydro and nuclear power plants are typical examples of baseload power plants.

- Peaking Power Plant – A peaking power plant is used to meet the variable demand above the average minimum demand. These power plants can be started at very short notice. Examples include gas turbines, diesel-powered plants and hydroelectric plants.
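The division of labor between the two classes can be shown with a small Python sketch that splits a demand profile into a constant baseload block and the variable part above it, which peaking plants would cover. The demand figures are hypothetical. Using the profile's minimum as a stand-in for the "average minimum demand" is a simplifying assumption.

```python
# Hypothetical half-hourly demand profile in MW (illustrative numbers only).
DEMAND_MW = [22_000, 20_000, 25_000, 31_000, 27_000]


def split_base_and_peak(demand_mw: list[float]) -> tuple[float, list[float]]:
    """Split demand into a constant baseload block and the variable part above it.

    Assumption: the minimum of the profile stands in for the "average minimum
    demand" that baseload plants serve.
    """
    base_mw = min(demand_mw)
    peak_mw = [d - base_mw for d in demand_mw]  # what peaking plants must cover
    return base_mw, peak_mw


if __name__ == "__main__":
    base, peak = split_base_and_peak(DEMAND_MW)
    print(f"baseload block: {base} MW")
    print(f"peaking requirement per period (MW): {peak}")
```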

There are several considerations to be taken into account while balancing demand and supply:

- The cost and time required to start up or shut down a power plant. Due to technical limitations, certain power plants cannot be started or shut down frequently.

- How quickly a power plant can increase its power output (its ramp rate).

- The cost of producing an extra MWh of electricity. This is called the marginal cost. It depends on fuel and other operating costs and does not include sunk costs, amortization or depreciation.
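Marginal cost is often approximated as fuel cost per MWh of electricity plus variable operating costs. The Python sketch below shows that calculation. The fuel price, efficiency and cost figures, and the `Plant` fields themselves, are hypothetical. Carbon and start-up costs are left out for simplicity. The fixed cost field is carried along only to show that it plays no part in the marginal cost.

```python
from dataclasses import dataclass


@dataclass
class Plant:
    name: str
    fuel_price_per_mwh_fuel: float  # price of fuel energy input (currency/MWh of fuel)
    efficiency: float               # electrical output / fuel energy input (0-1)
    variable_om_per_mwh: float      # other variable operating cost (currency/MWh)
    fixed_cost_per_year: float      # capital recovery, depreciation, etc.


def marginal_cost(plant: Plant) -> float:
    """Cost of producing one extra MWh of electricity.

    Producing 1 MWh of electricity burns 1 / efficiency MWh of fuel.
    fixed_cost_per_year is deliberately ignored: sunk and fixed costs do not
    change with one extra MWh, so they are not part of the marginal cost.
    """
    if not 0 < plant.efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    fuel_cost = plant.fuel_price_per_mwh_fuel / plant.efficiency
    return fuel_cost + plant.variable_om_per_mwh


if __name__ == "__main__":
    gas = Plant("hypothetical gas plant", 20.0, 0.5, 3.0, 25_000_000.0)
    print(f"{gas.name}: {marginal_cost(gas):.2f} per MWh")
```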

Electrical Technology

The purpose of a power plant is to maintain a voltage difference across the two ends of the wire. This voltage difference drives the flow of electrons, which constitutes the electric current. An important point to note is that the electrical signal propagates along the wire at a large fraction of the speed of light, whereas the electrons themselves drift at only a fraction of a millimeter per second. The movement of electrons creates an electromagnetic field, and it is this field that carries the power.

In a single-phase 220 V, 60 Hz AC supply, 220 V is the Root Mean Square (RMS) value of the sinusoidal voltage. The instantaneous voltage oscillates between +311 V and -311 V sixty times a second. The resulting pulsation in delivered power does not matter for household appliances. However, some industrial equipment, such as high-power motors or welding equipment, is sensitive to such variations and requires a 3-phase AC connection.
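The ±311 V figure follows from the RMS value: for a pure sinusoid, peak = √2 × RMS. The Python sketch below computes the peak and then samples one cycle numerically to confirm that the RMS of the samples comes back to 220 V. It assumes an ideal, undistorted sine wave. The function names are illustrative.

```python
import math


def peak_from_rms(v_rms: float) -> float:
    """Peak amplitude of a pure sinusoid whose RMS value is v_rms."""
    return math.sqrt(2) * v_rms


def instantaneous_voltage(v_rms: float, freq_hz: float, t_s: float) -> float:
    """Voltage at time t_s for a sinusoid that starts at zero at t = 0."""
    return peak_from_rms(v_rms) * math.sin(2 * math.pi * freq_hz * t_s)


def rms_over_one_cycle(v_rms: float, freq_hz: float, samples: int = 1000) -> float:
    """Numerically recover the RMS value from evenly spaced samples of one cycle."""
    period = 1.0 / freq_hz
    values = [instantaneous_voltage(v_rms, freq_hz, k * period / samples)
              for k in range(samples)]
    return math.sqrt(sum(v * v for v in values) / samples)


if __name__ == "__main__":
    print(f"peak: {peak_from_rms(220):.1f} V")  # about 311.1 V
    print(f"RMS check: {rms_over_one_cycle(220, 60):.1f} V")
```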

The electricity that comes out of a power plant is 3-phase alternating current. 3-phase AC consists of three sinusoidal voltages, carried on separate conductors and offset from one another by a phase difference of 120 degrees. With a balanced load, the total power delivered by the three phases is constant rather than pulsating.
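The difference between the single-phase and 3-phase cases can be checked numerically. The Python sketch below assumes a balanced, purely resistive load, so current is in phase with voltage. The voltage, current and frequency values are hypothetical. Single-phase power swings between zero and V·I. The sum over three phases stays at 1.5·V·I, because sin²θ + sin²(θ−120°) + sin²(θ−240°) = 3/2.

```python
import math


def phase_power(v_peak: float, i_peak: float, freq_hz: float, t_s: float,
                shift_deg: float) -> float:
    """Instantaneous power in one phase feeding a purely resistive load.

    Voltage and current are in phase, so p = v * i = V*I*sin^2(theta).
    """
    theta = 2 * math.pi * freq_hz * t_s - math.radians(shift_deg)
    return (v_peak * math.sin(theta)) * (i_peak * math.sin(theta))


def three_phase_power(v_peak: float, i_peak: float, freq_hz: float, t_s: float) -> float:
    """Total power of three identical phases offset by 0, 120 and 240 degrees."""
    return sum(phase_power(v_peak, i_peak, freq_hz, t_s, s) for s in (0, 120, 240))


if __name__ == "__main__":
    v_peak, i_peak, f = 311.0, 10.0, 50.0        # hypothetical balanced load
    times = [k / (f * 200) for k in range(200)]  # 200 samples over one cycle
    single = [phase_power(v_peak, i_peak, f, t, 0) for t in times]
    total = [three_phase_power(v_peak, i_peak, f, t) for t in times]
    print(f"single phase: {min(single):.0f} W to {max(single):.0f} W")
    print(f"three phase:  {min(total):.0f} W to {max(total):.0f} W")
```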

We could well have used a DC supply, where the power output is essentially constant. However, DC has other limitations that make it less viable:

- The electricity produced by a power plant is in the form of Alternating Current. Hence, conversion to DC would be an extra step.

- DC voltage cannot be stepped up cheaply, because transformers work only with AC. Yet stepping up the voltage is essential for avoiding power loss when transmitting electricity over long distances.

However, recent developments in High Voltage DC (HVDC) transmission are turning the tide in favor of DC.

System Frequency

The frequency of the system is of utmost importance in balancing supply and demand. The frequency must be maintained at 50 Hz (60 Hz in some countries) for the electrical system to work coherently. Power generators are designed to operate within a very narrow frequency band (about 1% variability), and there are controls to disconnect a plant from the grid if the frequency moves outside the safe operational limit. Hence, a frequency excursion beyond the tolerance level can trip generators one after another. This knock-on effect may eventually lead to a blackout.

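When demand exceeds supply, the system frequency falls. When supply exceeds demand, it rises. The rate of this change depends on the inertia of the system, i.e. the kinetic energy stored in the rotating masses of the generators and motors synchronized to the grid.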
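A common first approximation of this relationship is the swing equation. It gives the initial rate of change of frequency (RoCoF) as df/dt = f0·ΔP / (2·H·S), where H is the inertia constant in seconds and S is the rating of the synchronized machines. The Python sketch below applies it. The system size, inertia constant and lost infeed are hypothetical. The formula only describes the first moments after a disturbance, before governors and frequency-sensitive load respond. Halving the inertia doubles the rate at which frequency falls, which is why low-inertia systems need faster-acting reserves.

```python
def rocof_hz_per_s(power_imbalance_mw: float, system_rating_mva: float,
                   inertia_constant_s: float, nominal_hz: float = 50.0) -> float:
    """Initial rate of change of frequency (RoCoF) from the swing equation.

    df/dt = f0 * dP / (2 * H * S)

    power_imbalance_mw: supply minus demand (negative means a shortfall).
    inertia_constant_s: H, stored kinetic energy per unit of rating (seconds).
    Only valid for the first moments after a disturbance, before governors and
    frequency-sensitive load respond.
    """
    return nominal_hz * power_imbalance_mw / (2.0 * inertia_constant_s * system_rating_mva)


if __name__ == "__main__":
    # Hypothetical system: 30 GVA of synchronous machines suddenly loses 1 GW.
    for h in (5.0, 2.5):
        rate = rocof_hz_per_s(-1_000, 30_000, h)
        print(f"H = {h} s: frequency falls at {abs(rate):.3f} Hz/s")
```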

The frequency of the system is an important parameter that is constantly being monitored by the System Operator (SO).

Electricity Infrastructure

As power plants are often located far away from residential and industrial regions, their output has to be transmitted over long distances, and transmission losses have to be factored in. To minimize these losses, which appear as heat in the wires, the voltage is stepped up to 100 kV or more. The transmission line takes the power to a substation, where electricity from other power plants is pooled together and the voltage is stepped down to around 10 kV. The busbar in the substation routes the power out in different directions to different localities.
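The benefit of a higher voltage comes from the ohmic loss formula P_loss = I²R. For a fixed power transfer, I = P / V, so raising the voltage by a factor k cuts the current by k and the loss by k². The Python sketch below illustrates this with a deliberately simplified model: unity power factor, a single conductor, and no three-phase or reactive-power effects. The 100 MW transfer and 2 Ω resistance are hypothetical.

```python
def line_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Ohmic loss I^2 * R for a given power transfer.

    Simplified model: current I = P / V (unity power factor, single conductor),
    ignoring three-phase details and reactive power.
    """
    current_a = power_w / voltage_v
    return current_a ** 2 * resistance_ohm


if __name__ == "__main__":
    P = 100e6  # hypothetical 100 MW transfer
    R = 2.0    # hypothetical line resistance in ohms
    for kv in (100, 400):
        loss = line_loss_w(P, kv * 1e3, R)
        print(f"{kv} kV: loss {loss / 1e6:.3f} MW ({100 * loss / P:.2f}% of transfer)")
```

Quadrupling the voltage from 100 kV to 400 kV cuts the loss sixteen-fold in this model.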

The distribution line carries 3-phase electricity from the substation to different localities. The 3-phase supply is branched out to serve industries and then split into three single-phase lines. The voltage in each single-phase line is stepped down to 220 V to meet household needs.

Market Participants

Several participants are involved in the electricity market, and together they ensure its reliability:

- Power Generator

- Transmission Owner

- Distribution Owner

- System Operator

- Electricity Retailer

- Consumers

Generators submit offers to sell electricity, and consumers submit bids to buy it. Since households, commercial users and small industries may not have the capability to participate in the electricity market directly, they are represented by retailers. In many cases, the bids to buy electricity are replaced by a load forecast made by the System Operator. The System Operator is responsible for balancing demand and supply. The transmission and distribution owners are responsible for maintaining their respective infrastructure.

Dispatch and Electricity Market

A day is divided into several dispatch periods. Often there are 48 dispatch periods in a day, each lasting 30 min. In more advanced electricity markets, the dispatch period can be as short as 5 min. The System Operator (SO) forecasts the load for each dispatch period, and the power generators then submit their offers on the electricity exchange.
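The arithmetic behind these figures is simple: a 30-minute period gives 24 × 60 / 30 = 48 periods a day, and a 5-minute period gives 288. The Python sketch below also maps a clock time to its dispatch period. The numbering convention (period 1 = 00:00 to 00:30) and the function names are assumptions for illustration.

```python
from datetime import time

MINUTES_PER_DAY = 24 * 60


def periods_per_day(period_minutes: int = 30) -> int:
    """Number of dispatch periods in a day for a given period length."""
    if MINUTES_PER_DAY % period_minutes != 0:
        raise ValueError("period length must divide the day evenly")
    return MINUTES_PER_DAY // period_minutes


def dispatch_period(t: time, period_minutes: int = 30) -> int:
    """1-based index of the dispatch period that contains clock time t."""
    periods_per_day(period_minutes)  # validates the period length
    minutes_since_midnight = t.hour * 60 + t.minute
    return minutes_since_midnight // period_minutes + 1


if __name__ == "__main__":
    print(periods_per_day(30), periods_per_day(5))  # 48 288
    print(dispatch_period(time(0, 15)))             # 1 (00:00-00:30)
    print(dispatch_period(time(17, 45)))            # 36 (17:30-18:00)
```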

The following example illustrates the mechanism of the electricity market:

- The UK SO forecasts the load for the dispatch period 00:00 – 00:30 on 1st Jan 2015 as 28,659 MW. This forecast was made days in advance, and the UK electricity exchange (APX) was open to offers from generators.

- 31st Dec 2014 12:00:00 (Noon) – As APX is a Day Ahead Market, it closes to offers for all dispatch periods of 1st Jan.

- 31st Dec 2014 23:00:00 – The SO prepares for the dispatch period 00:00 – 00:30 on 1st Jan 2015. It examines the following offers in ascending order of offer price:

Generators A, B, C and D would therefore contribute 27,000 MW in total and generator E would contribute 1,659 MW, meeting the demand of 28,659 MW. The price of the last MWh of electricity used to meet demand is called the System Marginal Price (SMP), which is GBP 18/MWh in this case.

- 1st Jan 2015 00:00:00 – The corresponding dispatch signals are sent to the respective generators.

- All dispatched generators are paid the SMP for each MWh of electricity they generate during the dispatch period, irrespective of their offer price. Paying generators the SMP rather than their own offer price encourages them to submit offers that reflect their true operating cost of producing electricity. Similarly, each buyer in the market is charged the SMP for every MWh of electricity consumed.
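The clearing step can be sketched in Python as a merit-order dispatch with a uniform price. Offers are sorted by price and taken until demand is met. The price of the last offer needed becomes the SMP, and every dispatched generator is paid that price. The offers below are hypothetical and are not taken from the APX example. The sketch also ignores start-up costs, ramp limits, losses and network constraints, all of which a real SO must consider.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    generator: str
    quantity_mw: float
    price_per_mwh: float


def clear_market(offers: list[Offer], demand_mw: float) -> tuple[dict[str, float], float]:
    """Dispatch offers in ascending price order until demand is met.

    Returns the dispatched MW per generator and the System Marginal Price (SMP),
    i.e. the offer price of the last MW needed to meet demand.
    """
    dispatch: dict[str, float] = {}
    remaining = demand_mw
    smp = 0.0
    for offer in sorted(offers, key=lambda o: o.price_per_mwh):
        if remaining <= 0:
            break
        taken = min(offer.quantity_mw, remaining)
        dispatch[offer.generator] = taken
        remaining -= taken
        smp = offer.price_per_mwh
    if remaining > 0:
        raise ValueError("offers are insufficient to meet demand")
    return dispatch, smp


def payments(dispatch: dict[str, float], smp: float,
             period_hours: float = 0.5) -> dict[str, float]:
    """Every dispatched generator is paid the SMP for each MWh, whatever it offered."""
    return {gen: mw * period_hours * smp for gen, mw in dispatch.items()}


if __name__ == "__main__":
    # Hypothetical offers for one 30-minute dispatch period.
    offers = [
        Offer("G4", 300, 25.0),
        Offer("G1", 400, 5.0),
        Offer("G3", 200, 15.0),
        Offer("G2", 300, 10.0),
    ]
    dispatch, smp = clear_market(offers, demand_mw=750)
    print(dispatch, smp)
    print(payments(dispatch, smp))
```

Here G3 is the marginal unit, so all three dispatched generators receive its price of 15 per MWh, including G1, which offered 5.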

Balancing Supply and Demand

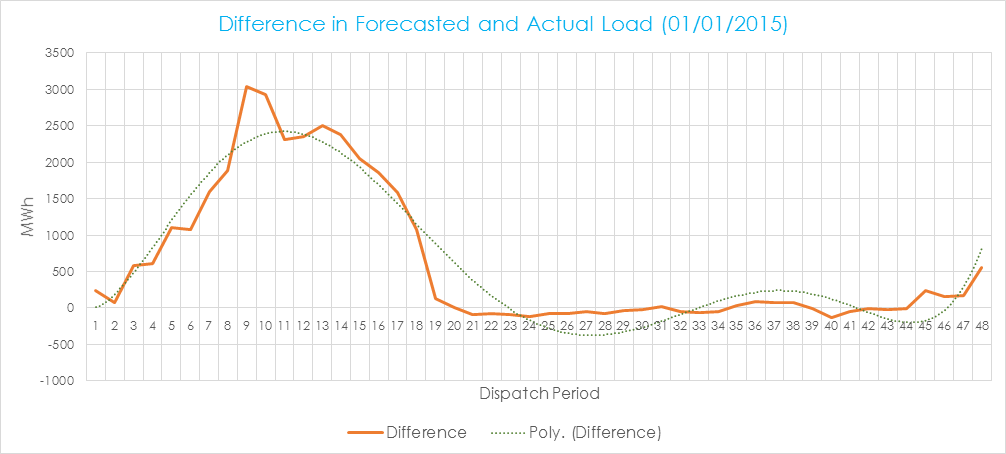

The forecast load closely follows the pattern of actual electricity demand. However, no forecast model can match demand exactly on a second-by-second basis.

The difference between the actual load and the energy procured from the electricity market has to be balanced by other mechanisms. The smoothed-out trend of this difference is met by load-following generation units. Load followers are synchronous generators that gradually increase or decrease their power output to maintain the system frequency.

Whenever there is a surge in demand that cannot be met by the load followers, the SO sends a dispatch signal to a regulating unit, which ramps up its production within 5 seconds and keeps the system frequency from falling.

In a nutshell, the SO uses three mechanisms to meet demand:

- Energy Dispatch

- Load Follower

- Regulation
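The three layers can be illustrated by splitting the imbalance (actual load minus scheduled energy) into a smooth trend and a fast residual. The trend is what load followers track, and the residual is left for regulation. In the Python sketch below, the trailing moving average is an assumed stand-in for a real SO's control logic, and the minute-by-minute data are hypothetical. By construction, energy dispatch plus load following plus regulation adds back up to the actual load.

```python
def trailing_average(values: list[float], window: int) -> list[float]:
    """Causal moving average: each point averages itself and up to window-1 predecessors."""
    out = []
    for i in range(len(values)):
        segment = values[max(0, i - window + 1): i + 1]
        out.append(sum(segment) / len(segment))
    return out


def split_imbalance(actual_mw: list[float], scheduled_mw: list[float],
                    window: int = 4) -> tuple[list[float], list[float]]:
    """Split (actual - scheduled) into a smooth load-following part and a fast residual.

    The moving-average filter is an illustrative assumption, not an SO's actual method.
    """
    imbalance = [a - s for a, s in zip(actual_mw, scheduled_mw)]
    load_following = trailing_average(imbalance, window)
    regulation = [d - lf for d, lf in zip(imbalance, load_following)]
    return load_following, regulation


if __name__ == "__main__":
    # Hypothetical minute-by-minute data (MW): scheduled energy vs actual load.
    scheduled = [1000, 1000, 1000, 1000, 1000, 1000, 1000, 1000]
    actual = [1005, 1012, 1008, 1020, 1031, 1024, 1040, 1036]
    lf, reg = split_imbalance(actual, scheduled)
    for s, f, r in zip(scheduled, lf, reg):
        print(f"energy {s} + load following {f:6.2f} + regulation {r:6.2f}")
```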

When the energy dispatch is met largely by renewable energy, these differences become much larger because of the intermittency of wind and solar generation. To ensure system security, the amount of regulation needed in such cases is far greater.

Contingency and Reserves

Generators occasionally fail, and such failures can jeopardize the security of the system. The SO must be prudent in this regard and is mandated to have mechanisms in place to deal with such situations. In addition to the electricity market, the SO also manages the reserve market. Reserves are unused capacity that can be brought online in case of a contingency.

- Spinning Reserve – These are generators that are already running and can quickly ramp up their production to cover an unscheduled outage. They are further classified as primary or secondary response depending on their response time (5 s to 30 s).

- Operating Reserve – These are offline generators that can start production within minutes to cover an unforeseen failure of a generating unit. They should be able to sustain their production for up to 2 hours.

Ancillary Services

The term ancillary services broadly covers services that are essential to maintaining grid stability and security. They can be classified into the following categories:

- Frequency Control Ancillary Services – The spinning or operating reserves required to meet any unforeseen outage.

- Voltage Control Ancillary Services – Frequency is the same across the whole system, whereas voltage is a local parameter that must be kept within the prescribed limits of each electrical component. The devices used to control voltage in particular parts of the system fall under ancillary services.

- Power Control Ancillary Services – These devices are similar to voltage control devices and prevent excessive power from reaching a particular electrical component.

- System Restart Ancillary Services – Whenever the system frequency breaches the tolerance level, generators may disconnect from the grid and shut down, which may lead to a complete or partial blackout. Restarting the system is not easy, because these generators may be unable to restart and re-synchronize with the grid until normal frequency is restored. System Restart Ancillary Services are provided by generators that help the system restart and return to the desired frequency range.

Inter-Regional Trade and Congestion

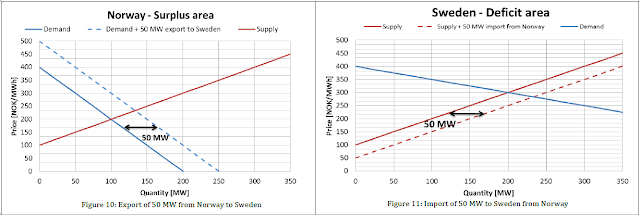

An electricity pool can comprise several regions connected by high-voltage transmission lines, which in Nord Pool are called interconnectors. Let us take Norway and Sweden as an example of two interconnected regions in Nord Pool. On 1st Jan 2015 00:00, Sweden's excess demand for electricity led to a high price of 300 NOK/MWh, whereas Norway, with a supply surplus, had a low price of 200 NOK/MWh.

If there were no transmission line connecting Norway and Sweden, the electricity prices would have remained as determined by their respective regional power exchanges. If there were no physical limit on the amount of power carried by the interconnector, the prices in Norway and Sweden would be exactly the same.

The real case lies between these two extremes, because the interconnector has a finite physical capacity. The new regional prices were recalculated as below.
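The effect of the interconnector limit can be reproduced with a toy two-zone model. Each zone has a hypothetical linear supply curve (price = intercept + slope × generation) and fixed demand. The intercepts, slopes, demands and capacities in the Python sketch below are invented and chosen only so that the isolated prices match the 200 and 300 NOK/MWh in the example. This is not Nord Pool's actual price-coupling algorithm. Power flows from the cheap zone to the expensive one until prices equalize or the capacity limit binds. When the limit binds, a price difference remains.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    name: str
    intercept: float  # NOK/MWh, hypothetical linear supply curve
    slope: float      # NOK/MWh per MW of generation
    demand_mw: float  # fixed (inelastic) demand

    def price(self, generation_mw: float) -> float:
        """Marginal price when the zone's generators produce generation_mw."""
        return self.intercept + self.slope * generation_mw


def couple(exporter: Zone, importer: Zone, capacity_mw: float) -> tuple[float, float, float]:
    """Flow from exporter to importer and the resulting zonal prices.

    Without a limit, flow rises until both prices are equal; the interconnector
    capacity caps that flow, leaving a price difference when it binds.
    """
    gap = importer.price(importer.demand_mw) - exporter.price(exporter.demand_mw)
    unconstrained_flow = gap / (exporter.slope + importer.slope)
    flow = max(-capacity_mw, min(capacity_mw, unconstrained_flow))
    exporter_price = exporter.price(exporter.demand_mw + flow)
    importer_price = importer.price(importer.demand_mw - flow)
    return flow, exporter_price, importer_price


if __name__ == "__main__":
    # Hypothetical curves chosen so isolated prices are 200 and 300 NOK/MWh.
    norway = Zone("Norway", intercept=100.0, slope=0.02, demand_mw=5_000)
    sweden = Zone("Sweden", intercept=100.0, slope=0.02, demand_mw=10_000)
    for cap in (0, 1_000, 5_000):
        flow, p_no, p_se = couple(norway, sweden, cap)
        print(f"capacity {cap} MW: flow {flow:.0f} MW, "
              f"Norway {p_no:.0f}, Sweden {p_se:.0f} NOK/MWh")
```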

Demand Response

We can observe a peak in demand at around 18:00 (the 36th dispatch period). Meeting this peak is relatively expensive compared with meeting baseload demand, and peak load also leads to congestion and higher transmission losses. According to Berkeley National Laboratory, 10% of the costs incurred in building and operating electricity infrastructure go toward meeting demand that occurs less than 1% of the time. The study also finds that a 5% reduction in peak demand can save up to $2.5 billion per year.

In a conventional electricity system, there is negligible demand-side participation in the electricity market. This demand inelasticity leads to the procurement of services that are expensive and environmentally taxing.

The idea of demand response is to build a smart grid where the iron curtain is removed and consumers are exposed to dynamic pricing. The new system will bring elasticity to electricity demand and encourage consumers either to increase their energy efficiency or to shift their load from periods of peak demand to periods when prices are low.
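The load-shifting response can be sketched in Python as a consumer with flexible energy needs placing that energy in the cheapest periods under dynamic prices. The prices, energy requirement and per-period power cap below are hypothetical, and the sketch assumes the load is fully flexible within the horizon. Filling the cheapest periods first is optimal here because cost is linear in energy and every period has the same cap.

```python
def schedule_flexible_load(prices: list[float], energy_mwh: float,
                           max_mw: float, period_hours: float = 0.5) -> list[float]:
    """Place a flexible energy requirement in the cheapest periods first.

    Returns MWh consumed in each period.
    """
    cap_mwh = max_mw * period_hours
    schedule = [0.0] * len(prices)
    remaining = energy_mwh
    for i in sorted(range(len(prices)), key=lambda k: prices[k]):
        if remaining <= 0:
            break
        schedule[i] = min(cap_mwh, remaining)
        remaining -= schedule[i]
    if remaining > 1e-9:
        raise ValueError("not enough capacity across periods")
    return schedule


def energy_cost(schedule: list[float], prices: list[float]) -> float:
    """Total cost of a schedule (MWh per period) at the given prices per MWh."""
    return sum(e * p for e, p in zip(schedule, prices))


if __name__ == "__main__":
    prices = [30.0, 25.0, 60.0, 90.0, 40.0]  # hypothetical dynamic prices per MWh
    peak_habit = [0.0, 0.0, 1.0, 1.0, 0.0]   # the same 2 MWh consumed at peak
    shifted = schedule_flexible_load(prices, energy_mwh=2.0, max_mw=2.0)
    print(shifted, energy_cost(shifted, prices), energy_cost(peak_habit, prices))
```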

Conclusion

The System Operator plays a pivotal role in ensuring the reliability of electricity in our society by:

- Forecasting the electricity demand

- Analyzing transmission and distribution losses

- Determining locational prices and system congestion

- Sending dispatch signals to the generation units

- Managing ancillary services market to ensure system stability

The mechanism by which electricity reaches our homes is mired in complexities, and these will increase as intermittent generating units are integrated into the grid. This intricacy is compounded by the inelasticity of demand. A major revolution in the form of a smart grid is required to keep pace with our growing demand for electricity, and this will require major investment in electricity infrastructure.

The revolution of shifting away from fossil fuels to renewable energy cannot come without the reinforcement of the electricity infrastructure.

Bibliography

- Fundamentals of Power System Economics - Daniel Kirschen and Goran Strbac

- AEMO

- Nord Pool

- Berkeley National Laboratory

- Clarke Science Center

Data Sources